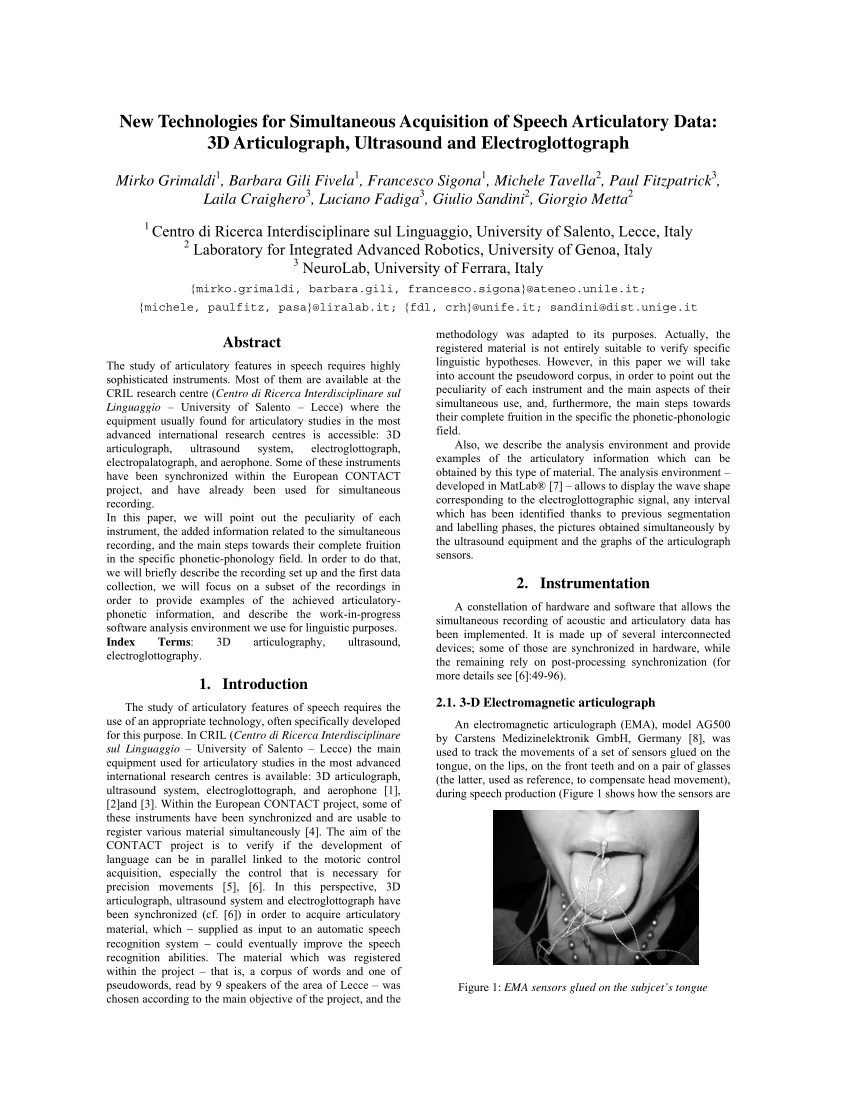

(PDF) New Technologies for Simultaneous Acquisition of Speech Articulatory Data: 3D Articulograph, Ultrasound and Electroglottograph

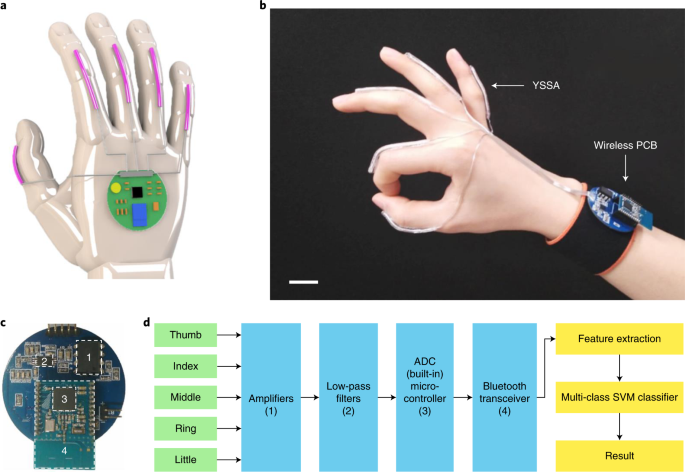

Sign-to-speech translation using machine-learning-assisted stretchable sensor arrays | Nature Electronics

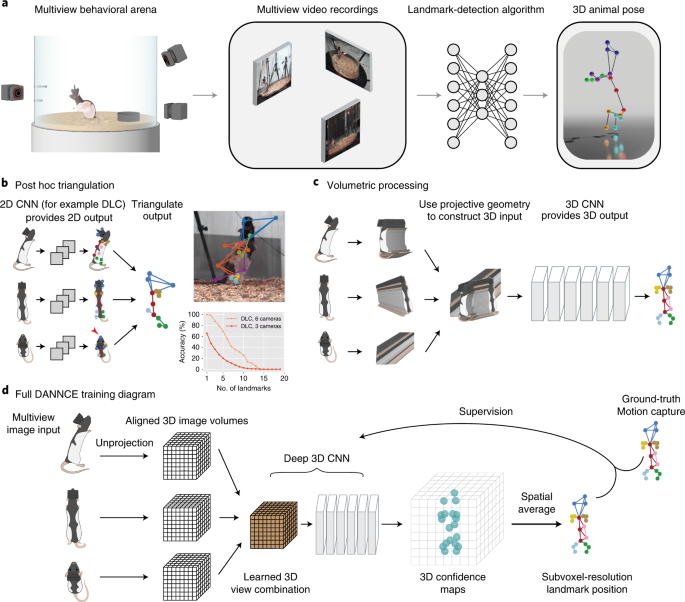

Geometric deep learning enables 3D kinematic profiling across species and environments | Nature Methods

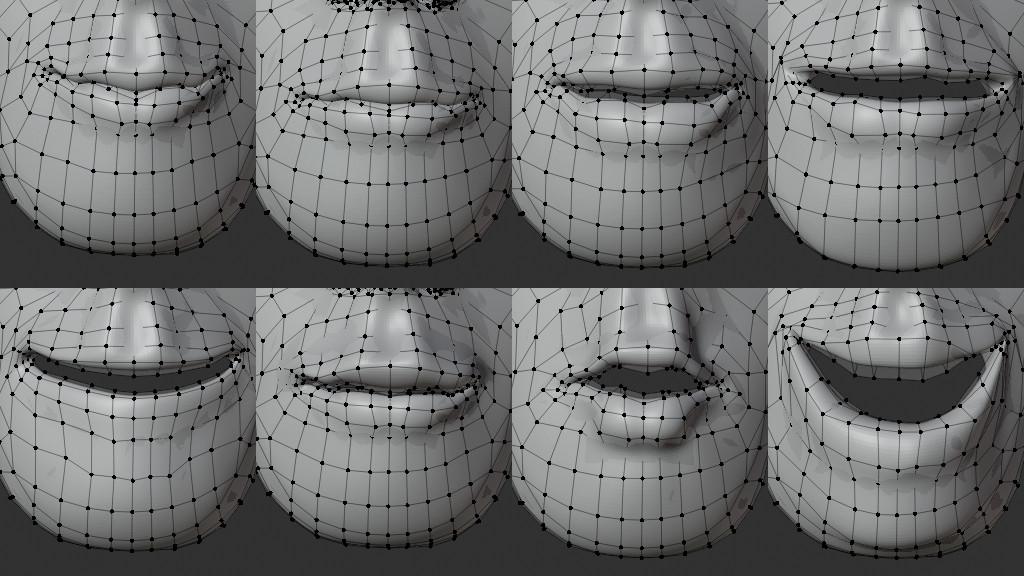

GitHub - sibozhang/Speech2Video: Code for ACCV 2020 "Speech2Video Synthesis with 3D Skeleton Regularization and Expressive Body Poses"

WithYou: Automated Adaptive Speech Tutoring With Context-Dependent Speech Recognition – Rekimoto Lab